Oppenheimer’s story is a cautionary tale in the age of AI

- Just as the advent of the atomic bomb marked humanity’s capability for self-inflicted devastation, the era of AI signals the potential for artificial intelligence to assume control over our destiny

Morally conflicted, the “father of the atomic bomb” subsequently emerged as a fervent proponent of nuclear arms control. But Oppenheimer’s advocacy cast doubt on his allegiance, leading to accusations of harbouring communist sympathies and resulting in the revocation of his security clearance.

The Oppenheimer backstory reveals the intricate relationship between humankind and science, in which humans wield science as a tool that, unfortunately, can be misused for ignoble ends. The Manhattan Project exposed two fateful features of this delicate relationship.

Firstly, while humans have misappropriated science many times before, the atomic bomb was a unique case: a race between nation-states to produce one specific weapon. Oppenheimer was in a race against Nazi Germany to develop the first atomic bomb.

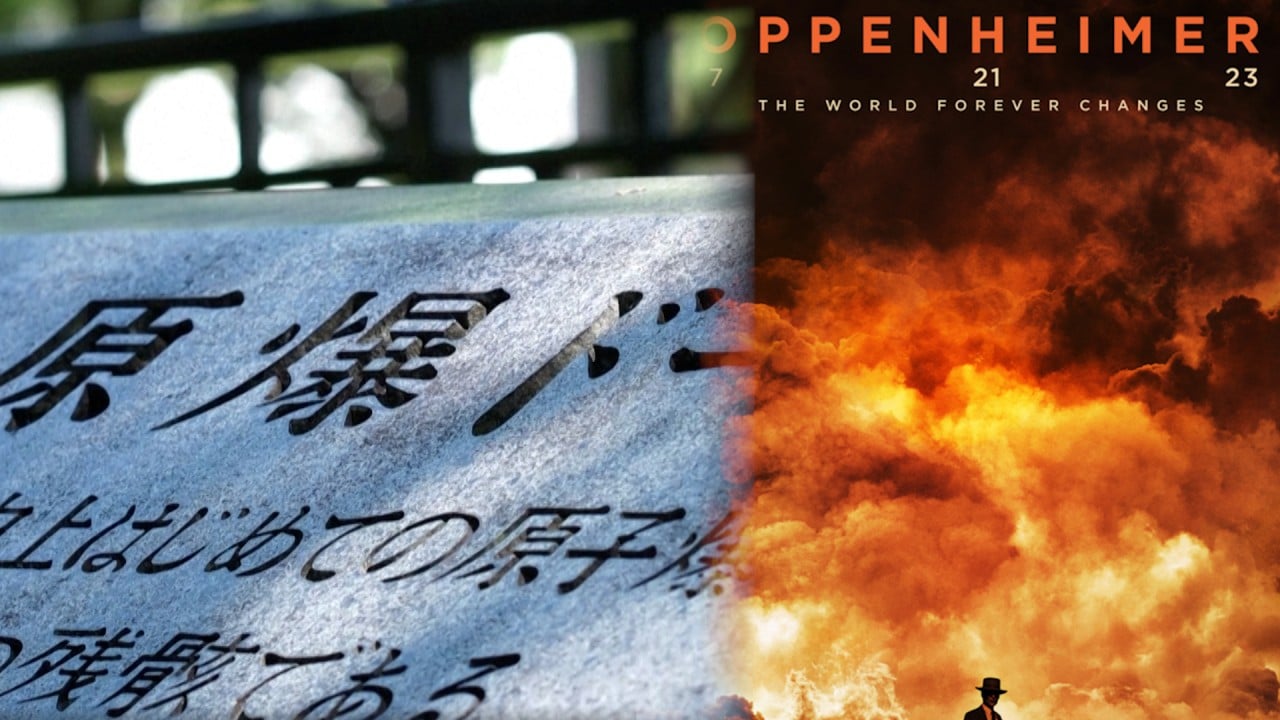

Secondly, while the misapplication of science has long harmed humankind, the atomic bomb is the ominous culmination of such misuse. It is a potentially apocalyptic weapon that has left humanity with an unsettling legacy: an enduring sense of vulnerability beneath the shadow of total annihilation.

Riddled with moral conflict, Oppenheimer sought solace in the belief that the harrowing aftermath of the bombings in Japan would deter the future use of similarly devastating weapons. Indeed, no nuclear weapon has been used in war since Hiroshima and Nagasaki.

Compounding the nuclear risk is the rising spectre of AI-related threats. Geoffrey Hinton, often dubbed “the godfather of AI”, has warned that generative AI’s self-learning capacity is surpassing expectations and could become an “existential threat” to human civilisation.

For some, AI represents the 21st century’s Oppenheimer moment: the emergence of yet another potentially world-ending technology.

Of grave concern is that the US-China competition for AI dominance has now extended into the military domain. Automating aspects of nuclear weapons systems increases the risk of accidental conflict and raises the danger that humans may one day relinquish oversight of AI-driven nuclear systems.

At the core of the doctrine of mutually assured destruction (MAD) lies a fundamental assumption: that human beings will act out of self-preservation, avoiding actions that could result in their own obliteration. However, a pivotal question emerges: can the same be assumed of artificial intelligence? Will AI demonstrate a commitment to safeguarding humanity?

The reality is that just as the advent of the A-bomb marked humanity’s capability for self-inflicted devastation, the era of AI signals the potential for artificial intelligence to assume control over our destiny, including the very authority to determine our own termination.

Throughout history, science has faithfully served as humanity’s handmaid. However, as AI reshapes this dynamic, science may no longer remain subservient to the dictates of its human creators.

Beyond the personal, Oppenheimer’s story is also a tragedy for humanity. Fuelled by enmity between nation-states, the weaponisation of science has left humanity in danger of nuclear annihilation.

Today, great-power rivalry for AI supremacy is exacerbating that risk. Unless the US and China overcome their mutual animosity, graver scenarios loom. Oppenheimer’s chilling quotation from the Bhagavad Gita, “Now I am become death, the destroyer of worlds”, could come back to haunt us, this time uttered by a malevolent AI.

Peter T.C. Chang is deputy director of the Institute of China Studies, University of Malaya, Kuala Lumpur, Malaysia