World cannot afford AI cooperation falling prey to US-China geopolitical strife

- Geopolitics is a threat to international cooperation on AI legislation, resulting in patchwork strategies, competing goals and disjointed global standards. The ongoing chip war between the US and China could make things worse, leading to uneven enforcement and unequal protection

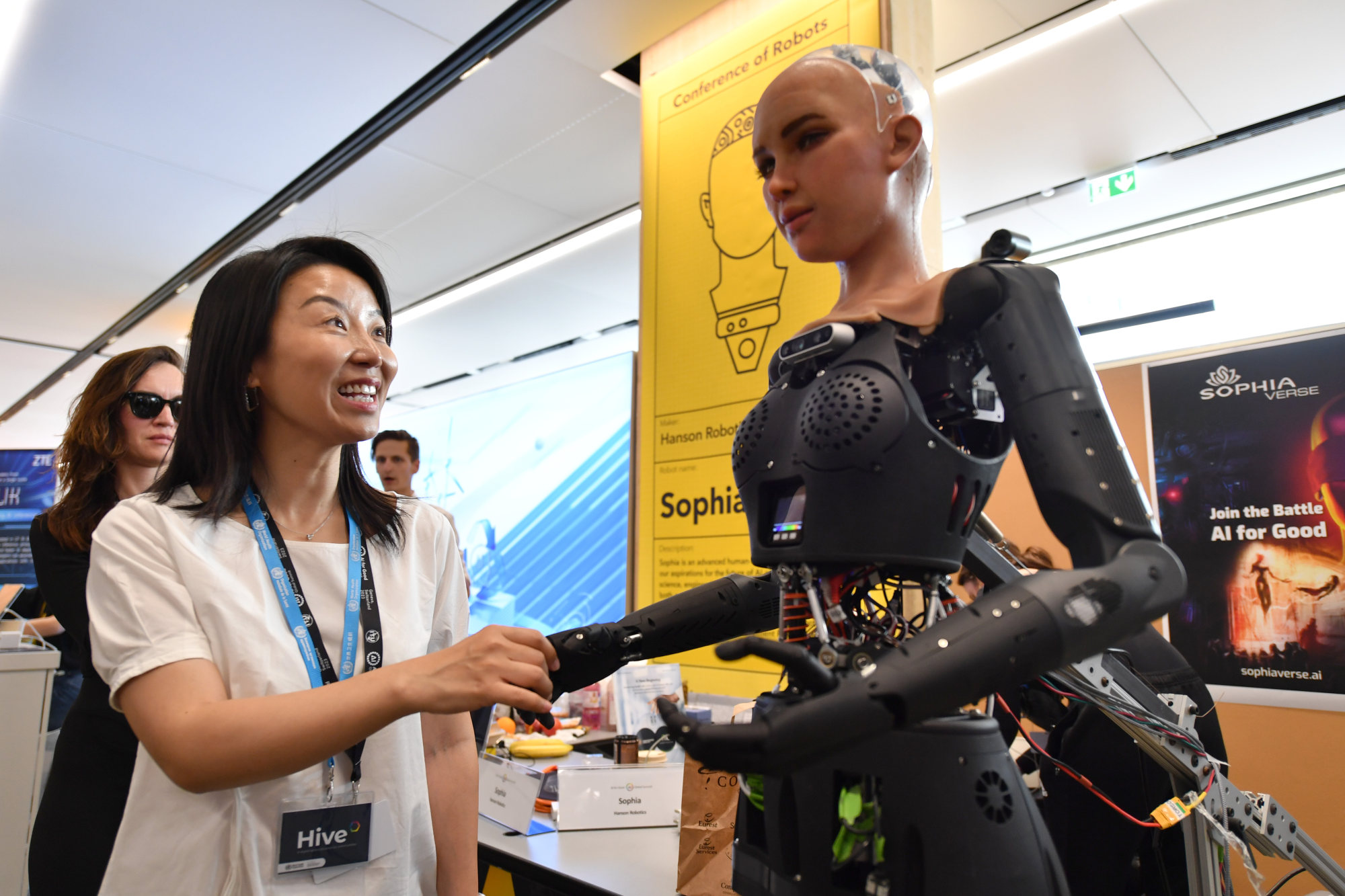

While many countries are taking policy action on AI, the scope of their rules differs: some nations focus on specific AI systems while others are adopting more comprehensive measures. An emphasis on security, justice, openness and human oversight is crucial to avoiding bias and governing these systems.

The geopolitical divisions were evident at the two events: speakers at the Geneva event came mostly from the West, while those at the Shanghai conference came mostly from China and its allies. The UK is planning to host a global AI summit this fall.

Inadequate protections could deepen inequality and cause moral dilemmas. ITU deputy secretary general Tomas Lamanauskas said, “It is up to us to make sure the good prevails over the risky, and that we leverage AI to help rescue the sustainable development agenda and save our planet.”

Cultivating trust by establishing an environment for exchanging best practices and discussing ethical issues is essential if the world is to start converging on global rules of engagement and ensure that AI upholds justice, respects human rights and minimises risks.

Stronger support is needed to bring nations together to craft unified legislation that regulates AI, promotes cooperation, and enables the sharing of information and resources via multilateral agreements, treaties and global standards. Developing ethical and responsible management of AI requires establishing a global AI governance structure with the participation of stakeholders from across the world.


The world faces a stark choice. Either we let tech giants control our fate, or the global community comes together to harness the power of AI to improve everyone’s lives. Responsible navigation of AI’s rapid growth requires addressing ethical concerns, ensuring accountability and promoting collaboration and cooperation.

Getting the most out of AI in a way that benefits everyone is not mutually exclusive with upholding the core principles of unified international legislation, multilateral agreements and global governance standards. As Bogdan-Martin warned, “We’re running out of time”. The stakes could not be higher for the global community to act quickly, collectively and decisively on AI.